Low Cost, Low Threshold, High Efficiency, High Security

One-stop Generative AI Solution to Help Enterprises Build Exclusive Large Models.

What is AFS?

The innovative business model of Taiwan’s semiconductor industry, particularly its wafer foundry services, has driven decades of vigorous growth in the global IC design sector and the broader semiconductor and technology ecosystem. Now generative AI is igniting a productivity revolution and signaling the arrival of a Moore’s Law era for artificial intelligence systems!

Taiwan AI Cloud launched AFS (AI Foundry Service) to help enterprises efficiently build exclusive large language models on AIHPC high-speed computing power. In the AI model training (optimization) stage, it provides the Traditional Chinese-enhanced “Formosa Foundation Model” (FFM): enterprises only need to prepare their data before starting large model training, which saves time and cost on the initial setup. In the AI model inference (deployment) stage, it offers “cloud” deployment services as well as the market’s only integrated hardware and software “on-premises” deployment solution.

From ChatGPT to IndustrialGPT, AFS is the enterprise’s AI foundry, helping enterprises efficiently build unique, practical, dedicated models that align with their corporate culture and real needs. These models meet strict enterprise requirements for data security, compliance, and privacy, and can be safely connected to internal enterprise systems. With its low cost and low threshold, AFS is designed specifically to empower enterprises’ generative AI applications and deployment.

AFS Features and Advantages

From Optimization to Deployment: Confidential, Exclusive Large Models That ChatGPT Cannot Offer

Integrated Pre-trained “FFM” With 176 Billion Parameters

A solid foundation for enterprises to build applications without starting from scratch.

Low Cost

A supercomputing environment with pay-as-you-go billing that scales with your usage, at costs from just thousands to tens of thousands of dollars, where you can quickly deploy enterprise-grade language model optimization services.

Helps enterprises control costs effectively, avoid waste, and save huge setup costs, reducing development risk and investment in hardware and manpower.

Low Threshold

Llama3-FFM and FFM-Mistral, Traditional Chinese-enhanced LLMs, together with cloud and on-premises model deployment services, let enterprises build models without starting from scratch, with more practical Traditional Chinese semantic understanding and text generation.

Helps enterprises quickly get started developing their own large models and choose solutions that suit their scale and needs.

High Efficiency

Built on AIHPC high-speed computing power and a no-code platform, AFS can complete optimization on 100 million tokens of enterprise data in as little as 6 hours, and a single 176B-model training run can handle up to 2.1 billion tokens.

Helps enterprises accelerate the training and deployment of exclusive large models, save optimization costs, and improve efficiency.
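To put the fine-tuning figure in perspective, the arithmetic below converts "100 million tokens in 6 hours" into an average throughput. It is only a restatement of the quoted numbers and assumes the whole 6 hours is spent processing tokens; real jobs also spend time on data loading and checkpointing.

```python
# Figures quoted in the "High Efficiency" paragraph above.
FINE_TUNE_TOKENS = 100_000_000  # 100 million tokens
WALL_CLOCK_HOURS = 6            # "as little as 6 hours"


def average_tokens_per_second(tokens: int, hours: float) -> float:
    """Average rate needed to get through `tokens` in `hours` of wall-clock time."""
    return tokens / (hours * 3600)


rate = average_tokens_per_second(FINE_TUNE_TOKENS, WALL_CLOCK_HOURS)
print(f"{rate:,.0f} tokens/s, {rate * 3600 / 1e6:.1f}M tokens/hour")
```

This works out to roughly 4,630 tokens per second, or about 16.7 million tokens per hour, sustained over the run.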

High Security

It meets enterprise requirements for cybersecurity, SLA, maintenance services, compliance, and auditability; it is licensed for commercial use, adopts tenant isolation, and provides the only deployment solution on the market that meets various local cybersecurity needs.

Effectively ensures the confidentiality of sensitive data and the privacy of enterprise users’ records while reducing cybersecurity risks and providing secure protection.

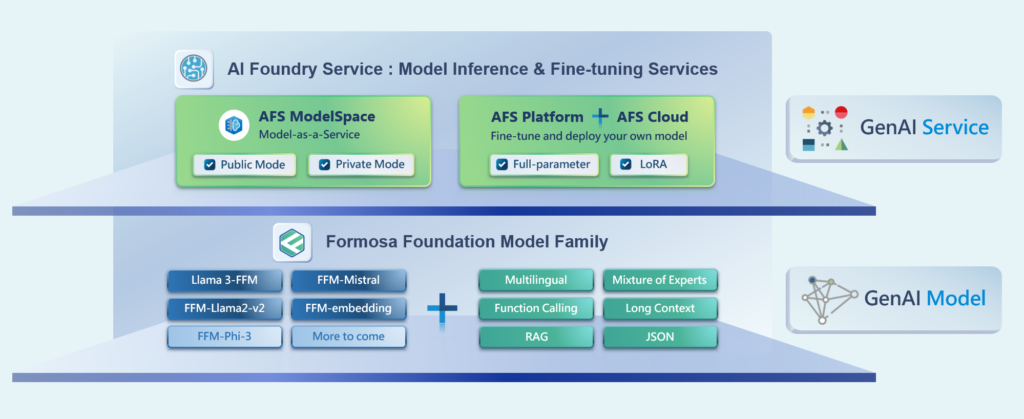

AFS One-stop GenAI Solution

Helps enterprises optimize and deploy models in a high-efficiency, high-security environment without spending billions to build their own AIHPC system and pre-train large models.

Cloud Deployment Solution for Various Open-Source LLMs

AFS ModelSpace

Provides enterprises with a variety of open-source large language models and flexible allocation of computing resources.

Fine-tuning Solution for Enterprise LLM

AFS Platform

Provides enterprises with the “lowest cost” and “highest efficiency” large language model training environment.

Deployment Solution for Enterprise LLM

AFS Cloud

Offers enterprises flexible deployment solutions with pay-as-you-go billing to eliminate unnecessary costs.

AFS Application Cases

Start building enterprise-specific large language models with AFS.

Connect External Data

Enterprise Knowledge Management

Numerical Predictions for the Future

Application Cases Reference

Inference Service API

AI Foundry Service x AIHPC Computing Power

On-premises LLM Solution


FAQ

AI Foundry Service (AFS) of Taiwan AI Cloud is a comprehensive enterprise-grade generative AI solution that provides end-to-end service, from large language model optimization to cloud or on-premises inference deployment. It includes the “AFS Platform+AFS Cloud”, which helps enterprises fine-tune proprietary models and set up inference services, and “AFS ModelSpace”, which offers various Traditional Chinese-enhanced Formosa Foundation Models (FFM) and reliable open-source models for quick cloud inference. All of these services are operated through a no-code platform.

Users who need proprietary models can quickly select an FFM base model and a high-throughput computing configuration in the “AFS Platform” and complete fine-tuning on hundreds of millions of tokens within a few hours. In addition, the “AFS Cloud” automatically creates an inference service endpoint and an independent API URL, which can be used to integrate enterprise generative AI applications and to test the model in Chat/Playground.
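Once AFS Cloud has created an endpoint and API URL, an application calls it over HTTP. The sketch below builds such a call with only the Python standard library. The URL, the API key, the bearer-token header, and the JSON field names (`inputs`, `parameters`, `max_new_tokens`) are all hypothetical placeholders, not the documented AFS request schema; substitute the values and format shown for your own endpoint.

```python
import json
import urllib.request

# Hypothetical placeholders: use the real endpoint URL and key from AFS Cloud.
API_URL = "https://example.invalid/afs/v1/generate"
API_KEY = "YOUR_API_KEY"


def build_inference_request(prompt: str, max_new_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a deployed model.

    The payload field names and the auth header are assumptions for illustration.
    """
    payload = {"inputs": prompt, "parameters": {"max_new_tokens": max_new_tokens}}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_KEY}",  # assumed auth scheme
        },
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 60.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

Usage would be `send(build_inference_request("..."))` against a real endpoint. Building the request separately from sending it keeps the payload easy to inspect and test before any network call is made.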

Enterprises that do not need fine-tuning can choose the latest FFM or open-source models on “AFS ModelSpace” and call their APIs directly or run inference tasks in Chat/Playground. “AFS ModelSpace” also offers two billing modes: by the number of tokens used (by tokens), or by model usage in GPU hours.

1. AFS Platform+AFS Cloud: Provides a billing mode based on “model building time”, with costs varying according to the selected base model.

2. AFS ModelSpace: Provides dual billing modes based on “number of tokens used” and “model building time”, with costs varying according to the selected base model.

For more information, please refer to the AFS Pricing.
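To see how the two ModelSpace billing modes trade off, the sketch below prices the same workload both ways. Both unit prices are hypothetical placeholders, not AFS rates (those are on the AFS Pricing page), and the workload's token count and GPU hours are inputs you would estimate yourself.

```python
from dataclasses import dataclass

# Hypothetical unit prices for illustration only; not actual AFS pricing.
PRICE_PER_1K_TOKENS = 0.02
PRICE_PER_GPU_HOUR = 3.0


@dataclass
class Usage:
    tokens: int       # tokens processed by the workload
    gpu_hours: float  # GPU hours the deployed model would occupy


def cost_by_tokens(u: Usage) -> float:
    """Cost under the "number of tokens used" mode."""
    return u.tokens / 1000 * PRICE_PER_1K_TOKENS


def cost_by_gpu_hours(u: Usage) -> float:
    """Cost under the GPU-hour ("model building time") mode."""
    return u.gpu_hours * PRICE_PER_GPU_HOUR


def cheaper_mode(u: Usage) -> str:
    """Name the cheaper billing mode for this workload (ties go to tokens)."""
    return "by tokens" if cost_by_tokens(u) <= cost_by_gpu_hours(u) else "by GPU hours"
```

With these placeholder prices, 100,000 tokens over one GPU hour is cheaper by tokens (2.0 vs 3.0), while 10 million tokens over ten GPU hours is cheaper by GPU hours (200.0 vs 30.0). In general, per-token billing tends to suit light or irregular traffic, and GPU-hour billing tends to pay off only under sustained high volume.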

1. For users who need to establish proprietary large models, please choose “AFS Platform+AFS Cloud” to help fine-tune proprietary models and establish inference services.

2. For users without model fine-tuning needs, please choose “AFS ModelSpace” to interact with various FFMs and open-source large models in real-time.

3. For users with sensitive data and security compliance needs, please choose “FFM Licensing” to deploy proprietary large models on-premises in enterprise data centers or private clouds.

For more information, please refer to the AFS Services.

The AI Foundry Service (AFS) of Taiwan AI Cloud is tailored for enterprise users, meeting their security, SLA, maintenance, and compliance needs. AFS offers a full range of services, including proof-of-concept (POC) verification, customized model optimization (fine-tuning), hybrid on-premises and cloud model deployment, and inference. Users can choose services based on their actual needs. Rest assured, Taiwan AI Cloud does not retain any customer data or records from the use of AFS services or any product interactions, nor does it use them as training data for FFM.

Additionally, all AFS services are built under national information security regulations and hosted in non-government A-grade computing facilities, ensuring that user services and data meet the highest security standards. For higher sensitivity and regulatory requirements, users can choose Taiwan AI Cloud’s “FFM Licensing” to deploy proprietary models on-premises, ensuring no internal data leakage.

If an enterprise has more than 1 billion tokens of internal and external data in a particular domain, the best way to build an enterprise-specific domain model is two-stage Pretrain-SFT training (pre-training followed by supervised fine-tuning). Adding a new dictionary of enterprise-specific domain knowledge, such as patent translations and specialized lexicons, ensures that certain terms map to specific translations or domain-specific meanings, and building this dictionary during the pre-training stage integrates it effectively into the model.

If there is not enough data for pre-training, SFT can be run directly. However, SFT still requires at least 270,000 tokens for partial-parameter fine-tuning, or at least 570,000 tokens for full-parameter fine-tuning.
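The sizing rules in the two paragraphs above can be written as a small planning helper. The thresholds come from the text; the function name, the split into a raw domain-corpus count and a separate SFT-data count, and the output format are assumptions made for this sketch.

```python
# Thresholds quoted in the text above.
PRETRAIN_THRESHOLD = 1_000_000_000               # domain data must exceed 1 billion tokens
SFT_MINIMUMS = {"partial": 270_000, "full": 570_000}  # minimum SFT tokens per mode


def plan_training(domain_tokens: int, sft_tokens: int) -> dict:
    """Hypothetical helper: suggest training stages and feasible SFT modes.

    domain_tokens: size of the enterprise's domain corpus (for pre-training).
    sft_tokens: size of the supervised fine-tuning (QA-pair) data.
    """
    feasible = [mode for mode, minimum in SFT_MINIMUMS.items() if sft_tokens >= minimum]
    stages = ["pretrain"] if domain_tokens > PRETRAIN_THRESHOLD else []
    if feasible:
        stages.append("sft")
    return {"stages": stages, "feasible_sft_modes": feasible}
```

For example, 2 billion domain tokens with 600,000 SFT tokens yields both stages with partial and full SFT available, while 50 million domain tokens with 300,000 SFT tokens yields SFT only, in partial-parameter mode.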

The QA-pair dataset must be in JSONL format, and each entry must total no more than 4,096 tokens.
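Before uploading, it helps to check the dataset against these two rules. The sketch below validates JSONL lines and the 4,096-token limit. The field names `question` and `answer` are hypothetical (use whatever schema AFS specifies), and the default token counter simply counts characters as a crude stand-in; for an accurate check, pass in the base model's own tokenizer.

```python
import json
from typing import Callable, Iterable, List, Tuple

MAX_TOKENS_PER_ENTRY = 4096  # per-entry limit stated above


def default_count_tokens(text: str) -> int:
    # Crude stand-in: one "token" per character. Replace with the real tokenizer.
    return len(text)


def validate_jsonl(lines: Iterable[str],
                   count_tokens: Callable[[str], int] = default_count_tokens,
                   fields: Tuple[str, ...] = ("question", "answer")) -> List[Tuple[int, str]]:
    """Return (line_number, problem) pairs; an empty list means every entry passed."""
    problems = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # tolerate blank lines, e.g. a trailing newline
        try:
            entry = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((lineno, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(entry, dict):
            problems.append((lineno, "entry is not a JSON object"))
            continue
        missing = [f for f in fields if f not in entry]
        if missing:
            problems.append((lineno, f"missing fields: {missing}"))
            continue
        # Total tokens across all fields of the entry must stay within the limit.
        total = sum(count_tokens(str(entry[f])) for f in fields)
        if total > MAX_TOKENS_PER_ENTRY:
            problems.append((lineno, f"{total} tokens exceeds {MAX_TOKENS_PER_ENTRY}"))
    return problems
```

Typical use is `validate_jsonl(open("train.jsonl", encoding="utf-8"))`, where the file name is only an example.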

The Formosa Foundation Model (FFM) is a Traditional Chinese-enhanced large language model (LLM) developed by Taiwan AI Cloud. Drawing on years of NLP experience, Taiwan AI Cloud’s R&D team has enhanced the performance of pre-trained models so that FFM delivers high-quality understanding of Traditional Chinese semantics and knowledge domains while retaining the capabilities of the native model. Tailored to the diverse application needs of Taiwanese enterprises across different fields, FFM produces high-quality generated text and has comprehensive world knowledge and multilingual capabilities.

The FFM model series offers Traditional Chinese enhanced versions of various open-source models, from BLOOM, Llama2, and Mistral to the latest Llama3. In terms of model specifications, it provides the most complete parameter configurations for each model, including BLOOM-176B, Mixtral-8x7B, Llama3-70B, and more. The Phi-3 version of FFM is also under development, so stay tuned.

As LLM application needs evolve rapidly, Taiwan AI Cloud is committed to providing the latest, most complete, diverse, and high-quality large language models and application services to accelerate enterprise AI 2.0 adoption.

Yes. Using LangChain’s custom LLM mechanism (see https://python.langchain.com/en/latest/modules/models/llms/examples/custom_llm.html), application developers can write a custom LLM wrapper following that guide and point it at the location of the deployed FFM.
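A LangChain custom LLM is a subclass of LangChain's `LLM` base class that implements a `_call` method (prompt in, text out) and an `_llm_type` property. The sketch below shows only the logic such a `_call` would contain, written with the standard library so it runs without LangChain installed; in a real wrapper you would subclass `LLM` and move this code into it. The endpoint URL, the request field `inputs`, the response field `generated_text`, and the bearer-token header are all assumptions, not the documented FFM interface.

```python
import json
import urllib.request
from typing import Callable, List, Optional


class FFMClient:
    """Stand-in for the `_call` logic of a hypothetical LangChain FFM wrapper."""

    def __init__(self, endpoint_url: str, api_key: str,
                 transport: Optional[Callable[[urllib.request.Request], dict]] = None):
        self.endpoint_url = endpoint_url
        self.api_key = api_key
        # Injectable transport so the wrapper can be exercised offline.
        self.transport = transport or self._http_transport

    @property
    def _llm_type(self) -> str:
        return "ffm-custom"

    @staticmethod
    def _http_transport(req: urllib.request.Request) -> dict:
        """Send the request over HTTP and decode the JSON response."""
        with urllib.request.urlopen(req, timeout=60) as resp:
            return json.loads(resp.read().decode("utf-8"))

    def _call(self, prompt: str, stop: Optional[List[str]] = None) -> str:
        req = urllib.request.Request(
            self.endpoint_url,
            data=json.dumps({"inputs": prompt}).encode("utf-8"),  # assumed schema
            headers={"Content-Type": "application/json",
                     "Authorization": f"Bearer {self.api_key}"},  # assumed auth
            method="POST",
        )
        text = self.transport(req)["generated_text"]  # assumed response field
        # Apply stop sequences client-side: cut at the earliest one that appears.
        if stop:
            cut = min((text.find(s) for s in stop if s in text), default=len(text))
            text = text[:cut]
        return text
```

Handling stop sequences in the wrapper keeps the behavior LangChain expects even if the endpoint itself does not support them.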

Register as a Taiwan AI Cloud member to apply for the “Experience Project” and receive 10,000 credits to use free for 60 days, so you can quickly try “AFS ModelSpace” and other cloud platform products. For more details, please refer to the free trial project description.


If you need further assistance, please contact us via sales@twsc.io or https://tws.twcc.ai/contact-us/

OpenAI’s GPTs and its GPT marketplace/store primarily target individual users, aiming to create personal GPT services. In contrast, Taiwan AI Cloud mainly provides enterprise-level users with reliable and controllable open-source large language models and generative AI solutions, while also offering on-premises inference services that meet enterprise compliance, security, and audit requirements.

The main differences are as follows:

1. No-code Platform – Taiwan AI Cloud offers no-code model training, fine-tuning, and inference services.

AFS is a complete no-code platform that offers an easy, quick start from model training to inference deployment, whereas NVIDIA provides the NeMo development framework and DGX Cloud, which require writing jobs and related programs.

2. Trusted Open-Source Large Language Models – Taiwan AI Cloud provides trusted open-source large language model solutions with local Taiwanese knowledge and enhanced Traditional Chinese corpus.

FFM is a large language model trained with local Taiwanese knowledge and an enhanced Traditional Chinese corpus, whereas NVIDIA’s models are pre-trained mainly for English tasks, without enhancement for Taiwanese knowledge or Traditional Chinese corpora.

3. Pricing Model – Taiwan AI Cloud provides different pricing models for model fine-tuning and inference service deployment based on the selected services.

Using NVIDIA AI Foundry Service requires paying for the NVIDIA AI Enterprise Software License and cloud deployment infrastructure costs. Additionally, using DGX Cloud incurs high monthly usage fees.

“AFS (AI Foundry Service)” provides one-stop integrated services that help enterprises develop their own enterprise-grade generative AI large models.

Fill Out the Form for a Consultation Now

Free Consultation Service

Contact our experts to learn more and get started with the best solution for you.